MPI library interfaces.

Data Types

| interface | mpi_mod_allgather |

| interface | mpi_mod_allgatherv_in_place |

| interface | mpi_mod_bcast |

| interface | mpi_mod_file_read_cfp |

| interface | mpi_mod_file_set_view |

| interface | mpi_mod_file_write |

| interface | mpi_mod_gatherv |

| interface | mpi_mod_isend |

| interface | mpi_mod_recv |

| interface | mpi_mod_recv_dynamic |

| interface | mpi_mod_rotate_arrays_around_ring |

| interface | mpi_mod_rotate_cfp_arrays_around_ring |

| interface | mpi_mod_scan_sum |

| interface | mpi_mod_send |

| interface | mpi_mod_send_dynamic |

| interface | mpi_reduce_inplace_sum_cfp |

| interface | mpi_reduce_sum |

| interface | mpi_reduceall_inplace_sum_cfp |

| interface | mpi_reduceall_max |

| interface | mpi_reduceall_min |

| interface | mpi_reduceall_sum_cfp |

| type | MPIBlockArray |

| Convenience MPI type wrapper with garbage collection. |

| interface | naive_mpi_reduce_inplace_sum |

Functions/Subroutines

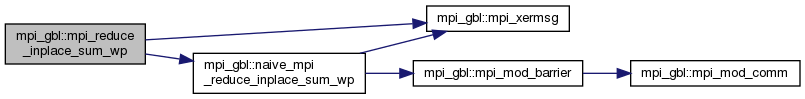

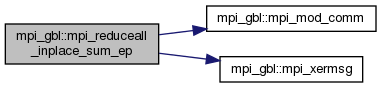

| integer(mpiint) function | mpi_mod_comm (comm_opt) |

| Comm or default comm. |

| subroutine | mpi_mod_rank (rank, comm) |

| Wrapper around MPI_Comm_rank. |

| subroutine | mpi_mod_barrier (error, comm) |

| Interface to the routine MPI_BARRIER. |

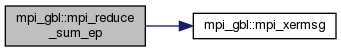

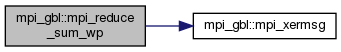

| subroutine | mpi_xermsg (mod_name, routine_name, err_msg, err, level) |

| Analogue of the xermsg routine. Uses xermsg to print the error message first, and then either aborts the program or returns from the routine, depending on the level of the message (warning/error), which is defined in the same way as in xermsg. |

| subroutine | check_mpi_running |

| Lightweight routine that aborts the program if MPI is not running. |

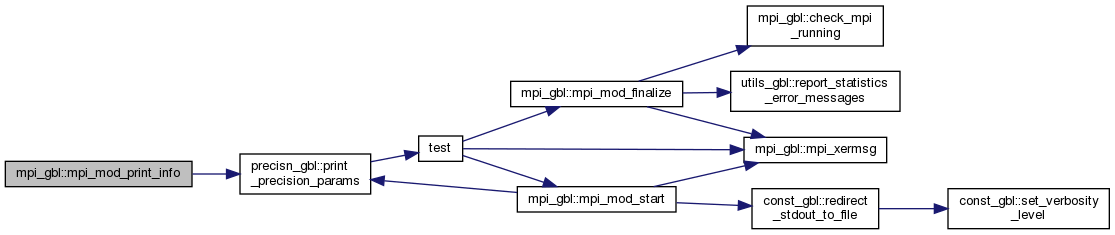

| subroutine | mpi_mod_print_info (u) |

| Display information about current MPI setup. |

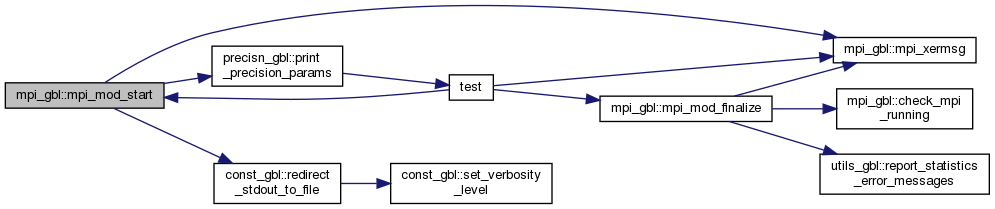

| subroutine | mpi_mod_start (do_stdout, allow_shared_memory) |

| Initializes MPI, assigns the rank of each process and determines how many processes are in use. It also maps the current floating-point precision (kind=cfp) to the corresponding MPI numeric type, and sets the unit for standard output that each rank will use (the value of stdout). In a serial run, stdout is not set here and keeps its default value input_unit, as specified in the module const. |

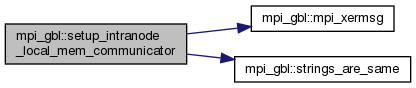

| subroutine | setup_intranode_local_mem_communicator |

| Creates intra-node communicators without the memory-sharing capability. Not used anywhere in the code yet, but it might become useful later on. |

| logical function | strings_are_same (str1, str2, length) |

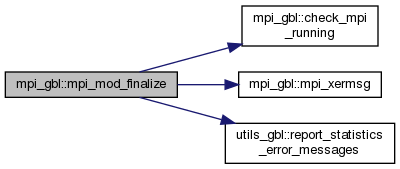

| subroutine | mpi_mod_finalize |

| Terminates the MPI session and stops the program. It is a blocking routine. |

| subroutine | mpi_mod_create_block_array (this, n, elemtype) |

| Set up a new MPI type describing a long array composed of blocks. |

| subroutine | mpi_mod_free_block_array (this) |

| Release MPI handle of the block array type. |

| subroutine | mpi_mod_bcast_logical (val, from, comm) |

| subroutine | mpi_mod_bcast_int32 (val, from, comm) |

| subroutine | mpi_mod_bcast_int64 (val, from, comm) |

| subroutine | mpi_mod_bcast_int32_array (val, from, comm) |

| subroutine | mpi_mod_bcast_int64_array (val, from, comm) |

| subroutine | mpi_mod_bcast_int32_3d_array (val, from, comm) |

| subroutine | mpi_mod_bcast_int64_3d_array (val, from, comm) |

| subroutine | mpi_mod_bcast_wp (val, from, comm) |

| subroutine | mpi_mod_bcast_ep (val, from, comm) |

| subroutine | mpi_mod_bcast_wp_array (val, from, comm) |

| subroutine | mpi_mod_bcast_ep_array (val, from, comm) |

| subroutine | mpi_mod_bcast_wp_3d_array (val, from, comm) |

| subroutine | mpi_mod_bcast_ep_3d_array (val, from, comm) |

| subroutine | mpi_mod_bcast_character (val, from, comm) |

| subroutine | mpi_mod_bcast_character_array (val, from, comm) |

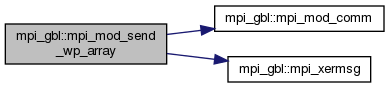

| subroutine | mpi_mod_send_wp_array (to, buffer, tag, n, comm) |

| subroutine | mpi_mod_send_ep_array (to, buffer, tag, n, comm) |

| subroutine | mpi_mod_send_int32_array (to, buffer, tag, n, comm) |

| subroutine | mpi_mod_send_int64_array (to, buffer, tag, n, comm) |

| subroutine | mpi_mod_isend_int32_array (to, buffer, tag, n, comm) |

| subroutine | mpi_mod_isend_int64_array (to, buffer, tag, n, comm) |

| subroutine | mpi_mod_recv_wp_array (from, tag, buffer, n, comm) |

| subroutine | mpi_mod_recv_ep_array (from, tag, buffer, n, comm) |

| subroutine | mpi_mod_recv_int32_array (from, tag, buffer, n, comm) |

| subroutine | mpi_mod_recv_int64_array (from, tag, buffer, n, comm) |

| subroutine | mpi_mod_send_wp_array_dynamic (to, buffer, tag, comm) |

| subroutine | mpi_mod_send_ep_array_dynamic (to, buffer, tag, comm) |

| subroutine | mpi_mod_recv_wp_array_dynamic (from, tag, buffer, n, comm) |

| subroutine | mpi_mod_recv_ep_array_dynamic (from, tag, buffer, n, comm) |

| subroutine | mpi_mod_file_open_read (filename, fh, ierr, comm) |

| subroutine | mpi_mod_file_set_view_wp (fh, disp, ierr, wp_dummy) |

| Sets view for a file containing floating point numbers with kind=wp. |

| subroutine | mpi_mod_file_set_view_ep (fh, disp, ierr, ep_dummy) |

| Sets view for a file containing floating point numbers with kind=ep. |

| subroutine | mpi_mod_file_read_wp (fh, buffer, buflen, ierr) |

| subroutine | mpi_mod_file_read_ep (fh, buffer, buflen, ierr) |

| subroutine | mpi_mod_file_close (fh, ierr) |

| subroutine | mpi_reduce_inplace_sum_wp (buffer, nelem) |

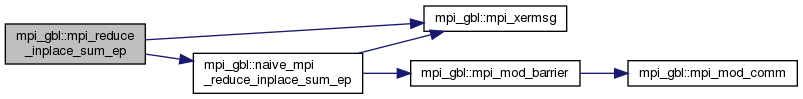

| subroutine | mpi_reduce_inplace_sum_ep (buffer, nelem) |

| subroutine | mpi_reduce_sum_wp (src, dest, nelem) |

| subroutine | mpi_reduce_sum_ep (src, dest, nelem) |

| subroutine | mpi_reduceall_sum_wp (src, dest, nelem, comm) |

| subroutine | mpi_reduceall_sum_ep (src, dest, nelem, comm) |

| subroutine | mpi_reduceall_max_int32 (src, dest, comm_opt) |

| Choose largest elements among processes from 32-bit integer arrays. |

| subroutine | mpi_reduceall_max_int64 (src, dest, comm_opt) |

| Choose largest elements among processes from 64-bit integer arrays. |

| subroutine | mpi_reduceall_min_int32 (src, dest, comm_opt) |

| Choose smallest elements among processes from 32-bit integer arrays. |

| subroutine | mpi_reduceall_min_int64 (src, dest, comm_opt) |

| Choose smallest elements among processes from 64-bit integer arrays. |

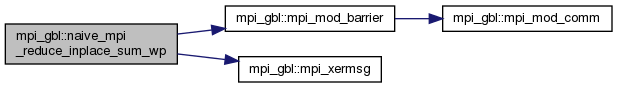

| subroutine | naive_mpi_reduce_inplace_sum_wp (buffer, nelem, split) |

| subroutine | naive_mpi_reduce_inplace_sum_ep (buffer, nelem, split) |

| subroutine | mpi_reduceall_inplace_sum_wp (buffer, nelem, comm) |

| subroutine | mpi_reduceall_inplace_sum_ep (buffer, nelem, comm) |

| subroutine | mpi_mod_rotate_arrays_around_ring_wp (elem_count, int_array, wp_array, max_num_elements, comm_opt) |

| Rotates a combination of the number of elements, an integer array and a float array (kind=wp) once around in a ring formation. |

| subroutine | mpi_mod_rotate_arrays_around_ring_ep (elem_count, int_array, ep_array, max_num_elements, comm_opt) |

| Rotates a combination of the number of elements, an integer array and a float array (kind=ep) once around in a ring formation. |

| subroutine | mpi_mod_rotate_wp_arrays_around_ring (elem_count, wp_array, max_num_elements, communicator) |

| Rotates a combination of the number of elements and a float array (kind=wp) once around in a ring formation. |

| subroutine | mpi_mod_rotate_ep_arrays_around_ring (elem_count, ep_array, max_num_elements, communicator) |

| Rotates a combination of the number of elements and a float array (kind=ep) once around in a ring formation. |

| subroutine | mpi_mod_rotate_int_arrays_around_ring (elem_count, int_array, max_num_elements, communicator) |

| Rotates a combination of the number of elements and an integer array once around in a ring formation. |

| subroutine | mpi_mod_allgather_int32 (send, receive, comm) |

| Allgather of one integer sent from every process. |

| subroutine | mpi_mod_allgather_int64 (send, receive, comm) |

| subroutine | mpi_mod_allgatherv_in_place_int32_array (sendcount, receive, comm) |

| subroutine | mpi_mod_allgatherv_in_place_int64_array (sendcount, receive, comm) |

| subroutine | mpi_mod_allgatherv_in_place_real64_array (sendcount, receive, comm) |

| subroutine | mpi_mod_allgatherv_in_place_real128_array (sendcount, receive, comm) |

| subroutine | mpi_mod_allgather_character (send, receive, comm) |

| Allgather of one string of length MPI_MAX_PROCESSOR_NAME sent from every process. |

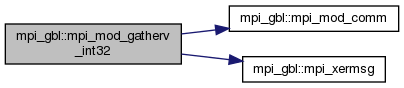

| subroutine | mpi_mod_gatherv_int32 (sendbuf, recvbuf, recvcounts, displs, to, comm) |

| Gather of a 32-bit integer array sent from every process and received on a given rank. |

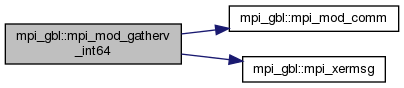

| subroutine | mpi_mod_gatherv_int64 (sendbuf, recvbuf, recvcounts, displs, to, comm) |

| Gather of a 64-bit integer array sent from every process and received on a given rank. |

| subroutine | mpi_mod_gatherv_real64 (sendbuf, recvbuf, recvcounts, displs, to, comm) |

| Gather of a 64-bit real array sent from every process and received on a given rank. |

| subroutine | mpi_mod_gatherv_real128 (sendbuf, recvbuf, recvcounts, displs, to, comm) |

| Gather of a 128-bit real array sent from every process and received on a given rank. |

| real(wp) function | mpi_mod_wtime () |

| Interface to the routine MPI_WTIME. |

| subroutine | mpi_mod_file_open_write (filename, fh, ierr, comm) |

| subroutine | mpi_mod_file_write_int32 (fh, n) |

| Let master process write one 4-byte integer to stream file. |

| subroutine | mpi_mod_file_write_array1d_int32 (fh, array, length) |

| Let master process write array of 4-byte integers to stream file. |

| subroutine | mpi_mod_file_write_array2d_int32 (fh, array, length1, length2) |

| Let master process write 2d array of 4-byte integers to stream file. |

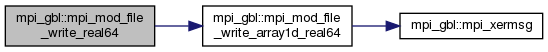

| subroutine | mpi_mod_file_write_real64 (fh, x) |

| Let master process write an 8-byte real to stream file. |

| subroutine | mpi_mod_file_write_array1d_real64 (fh, array, length) |

| Let master process write array of 8-byte reals to stream file. |

| subroutine | mpi_mod_file_write_array2d_real64 (fh, array, length1, length2) |

| Let master process write 2d array of 8-byte reals to stream file. |

| subroutine | mpi_mod_file_write_array3d_real64 (fh, array, length1, length2, length3) |

| Let master process write 3d array of 8-byte reals to stream file. |

| subroutine | mpi_mod_file_set_size (fh, sz) |

| Collectively resize file. |

| subroutine | mpi_mod_file_write_darray2d_real64 (fh, m, n, nprow, npcol, rb, cb, locA, myrows, mycols, comm) |

| Write block-cyclically distributed matrix to a stream file. |

| subroutine | mpi_mod_scan_sum_int32 (src, dst, comm_opt) |

| Wrapper around MPI_Scan. |

| subroutine | mpi_mod_scan_sum_int64 (src, dst, comm_opt) |

| Wrapper around MPI_Scan. |
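
The mpi_mod_scan_sum_* wrappers are described only as wrappers around MPI_Scan. Judging by the name, they presumably reduce with MPI_SUM, in which case the scan is inclusive: rank r receives the sum of the contributions of ranks 0..r. The serial sketch below models that result for all ranks at once (no MPI is involved, and the function name inclusive_scan is hypothetical):

```fortran
module scan_sum_sketch
    use iso_fortran_env, only: int64
    implicit none
    private
    public :: inclusive_scan

contains

    ! Serial model of MPI_Scan with MPI_SUM: element r+1 of the result is what
    ! rank r would receive, i.e. the sum of the contributions of ranks 0..r.
    pure function inclusive_scan(src_of_rank) result(dst_of_rank)
        integer(int64), intent(in) :: src_of_rank(:)
        integer(int64) :: dst_of_rank(size(src_of_rank))
        integer :: r

        if (size(src_of_rank) == 0) return
        dst_of_rank(1) = src_of_rank(1)
        do r = 2, size(src_of_rank)
            dst_of_rank(r) = dst_of_rank(r - 1) + src_of_rank(r)
        end do
    end function inclusive_scan

end module scan_sum_sketch
```

For contributions [4, 0, 3, 5] on ranks 0..3 the ranks receive [4, 4, 7, 12]. Subtracting each rank's own contribution gives the exclusive offsets [0, 4, 4, 7], a common way to work out where each rank's data start in a shared array or file.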

Variables

| integer, parameter, public | mpiint = kind(0) |

| integer, parameter, public | mpiaddr = longint |

| integer, parameter, public | mpicnt = longint |

| integer, parameter, public | mpiofs = longint |

| logical, public, protected | mpi_running = .false. |

| Can be set only once, at the beginning of the program run, by a call to MPI_MOD_START. Its value is accessed by MPI_MOD_CHECK, which aborts the program if MPI is not running. It stays .false. if the library was compiled without MPI support. |

| logical, public, protected | mpi_started = .false. |

| Similar to mpi_running, but always set to .true. once MPI_MOD_START has been called, whether or not the library was built with MPI support. So, while mpi_running tells whether the program is running under MPI, mpi_started tells whether the call to MPI_MOD_START has already been made (even in a serial build). |

| integer(kind=mpiint), public, protected | nprocs = -1 |

| Total number of processes. Set by mpi_mod_start. |

| integer(kind=mpiint), public, protected | myrank = -1 |

| The local process number (rank). Set by mpi_mod_start. |

| integer(kind=mpiint), public, protected | local_nprocs = 1 |

| Total number of processes on the node this task is bound to. Set by mpi_mod_start. |

| integer(kind=mpiint), public, protected | local_rank = 0 |

| The local process number (rank) on the node this task is bound to. Set by mpi_mod_start. |

| logical, public, protected | shared_enabled = .false. |

| The routine mpi_mod_start creates the shared_communicator, which groups all tasks sitting on the same node. If the MPI 3.0 standard is available, the shared_communicator also enables the creation of shared memory areas, and shared_enabled is set to .true.; otherwise shared_enabled is set to .false. |

| integer(kind=mpiint), parameter, public | master = 0 |

| ID of the master process. |

| integer, public, protected | max_data_count = huge(dummy_32bit_integer) |

| Largest integer (e.g. number of elements) supported by the standard MPI 32-bit integer API. |

| character(len=mpi_max_processor_name), parameter, public, protected | procname = "N/A" |

| Name of the processor on which the current process is running. |

| integer(kind=mpiint), public, protected | shared_communicator = -1 |

| Intra-node communicator created by mpi_mod_start. |

Detailed Description

MPI library interfaces.

- Date

- 2014 - 2019

Convenience generic interfaces for MPI calls. Specific implementations are available for 4-byte and 8-byte integers and for 8-byte and 16-byte reals.

- Warning

- This module is also used by SCATCI, but always in DOUBLE PRECISION. However, SCATCI must always be linked to the integral library of the same precision as was used to calculate the integrals file. To ensure that SCATCI works correctly, this module must therefore support calls to both DOUBLE and QUAD precision MPI routines within the same program run. This is achieved by interfacing all routines that manipulate floating-point variables for both the wp and ep precisions.

Function/Subroutine Documentation

◆ check_mpi_running()

| subroutine mpi_gbl::check_mpi_running |

This is a lightweight routine which aborts the program if MPI is not found running.

◆ mpi_mod_allgather_character()

| subroutine mpi_gbl::mpi_mod_allgather_character | ( | character(len=*), intent(in) | send, |

| character(len=*), dimension(:), intent(out) | receive, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

Allgather of one string of length MPI_MAX_PROCESSOR_NAME sent from every process.

◆ mpi_mod_allgather_int32()

| subroutine mpi_gbl::mpi_mod_allgather_int32 | ( | integer(int32), intent(in) | send, |

| integer(int32), dimension(:), intent(out) | receive, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

Allgather of one integer sent from every process.

◆ mpi_mod_allgather_int64()

| subroutine mpi_gbl::mpi_mod_allgather_int64 | ( | integer(int64), intent(in) | send, |

| integer(int64), dimension(:), intent(out) | receive, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_allgatherv_in_place_int32_array()

| subroutine mpi_gbl::mpi_mod_allgatherv_in_place_int32_array | ( | integer(mpiint), intent(in) | sendcount, |

| integer(int32), dimension(:), intent(inout) | receive, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_allgatherv_in_place_int64_array()

| subroutine mpi_gbl::mpi_mod_allgatherv_in_place_int64_array | ( | integer(mpiint), intent(in) | sendcount, |

| integer(int64), dimension(:), intent(inout) | receive, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_allgatherv_in_place_real128_array()

| subroutine mpi_gbl::mpi_mod_allgatherv_in_place_real128_array | ( | integer(mpiint), intent(in) | sendcount, |

| real(real128), dimension(:), intent(inout) | receive, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_allgatherv_in_place_real64_array()

| subroutine mpi_gbl::mpi_mod_allgatherv_in_place_real64_array | ( | integer(mpiint), intent(in) | sendcount, |

| real(real64), dimension(:), intent(inout) | receive, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_barrier()

| subroutine mpi_gbl::mpi_mod_barrier | ( | integer, intent(out) | error, |

| integer(mpiint), intent(in), optional | comm | ||

| ) |

Interface to the routine MPI_BARRIER.

◆ mpi_mod_bcast_character()

| subroutine mpi_gbl::mpi_mod_bcast_character | ( | character(len=*), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_character_array()

| subroutine mpi_gbl::mpi_mod_bcast_character_array | ( | character(len=*), dimension(:), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_ep()

| subroutine mpi_gbl::mpi_mod_bcast_ep | ( | real(ep), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_ep_3d_array()

| subroutine mpi_gbl::mpi_mod_bcast_ep_3d_array | ( | real(ep), dimension(:,:,:), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_ep_array()

| subroutine mpi_gbl::mpi_mod_bcast_ep_array | ( | real(ep), dimension(:), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_int32()

| subroutine mpi_gbl::mpi_mod_bcast_int32 | ( | integer(int32), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_int32_3d_array()

| subroutine mpi_gbl::mpi_mod_bcast_int32_3d_array | ( | integer(int32), dimension(:,:,:), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_int32_array()

| subroutine mpi_gbl::mpi_mod_bcast_int32_array | ( | integer(int32), dimension(:), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_int64()

| subroutine mpi_gbl::mpi_mod_bcast_int64 | ( | integer(int64), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_int64_3d_array()

| subroutine mpi_gbl::mpi_mod_bcast_int64_3d_array | ( | integer(int64), dimension(:,:,:), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_int64_array()

| subroutine mpi_gbl::mpi_mod_bcast_int64_array | ( | integer(int64), dimension(:), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_logical()

| subroutine mpi_gbl::mpi_mod_bcast_logical | ( | logical, intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_wp()

| subroutine mpi_gbl::mpi_mod_bcast_wp | ( | real(wp), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_wp_3d_array()

| subroutine mpi_gbl::mpi_mod_bcast_wp_3d_array | ( | real(wp), dimension(:,:,:), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_bcast_wp_array()

| subroutine mpi_gbl::mpi_mod_bcast_wp_array | ( | real(wp), dimension(:), intent(inout) | val, |

| integer(mpiint), intent(in) | from, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_comm()

Comm or default comm.

- Date

- 2019

Auxiliary function used in other subroutines for brevity. If an argument is given, it will return its value. If no argument is given, the function returns MPI_COMM_WORLD.
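
A minimal sketch of this optional-argument pattern in plain Fortran, assuming a hypothetical constant default_comm that stands in for MPI_COMM_WORLD so that the code compiles without MPI (the module and function names are illustrative, not the library's):

```fortran
module comm_default_sketch
    implicit none
    private
    public :: comm_or_default

    ! Hypothetical stand-in for MPI_COMM_WORLD so the sketch compiles without MPI.
    integer, parameter, public :: default_comm = 0

contains

    ! Returns the optional communicator if the caller passed one,
    ! otherwise falls back to the default communicator.
    pure integer function comm_or_default(comm_opt) result(comm)
        integer, intent(in), optional :: comm_opt

        if (present(comm_opt)) then
            comm = comm_opt
        else
            comm = default_comm
        end if
    end function comm_or_default

end module comm_default_sketch
```

A wrapper can forward its own optional comm dummy straight to such a function, because Fortran allows an absent optional argument to be passed on to another optional dummy argument.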

◆ mpi_mod_create_block_array()

| subroutine mpi_gbl::mpi_mod_create_block_array | ( | class(mpiblockarray), intent(inout) | this, |

| integer, intent(in) | n, | ||

| integer(mpiint), intent(in) | elemtype | ||

| ) |

Set up a new MPI type describing a long array composed of blocks.

- Date

- 2019

Creates an MPI type handle describing a structured data type that can be used to transfer very long arrays of data. The array is represented as a set of blocks, none of whose element counts exceeds the maximal value of the MPI integer type. To avoid resource leaks, the returned handle should be released by a call to MPI_Type_free, which mpi_mod_free_block_array does for MPIBlockArray objects.

- Parameters

- [in,out] this: Block array object to initialize.
- [in] n: Number of elements in the array.
- [in] elemtype: MPI datatype handle of the array elements.
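
The exact block layout is not documented here. As a hedged sketch of the arithmetic only, assuming the array is cut into full blocks of max_count elements (for example max_data_count) plus one shorter trailing block, the hypothetical helper block_layout below computes the block counts; it does not create any MPI type.

```fortran
module block_layout_sketch
    use iso_fortran_env, only: int64
    implicit none
    private
    public :: block_layout

contains

    ! Splits n elements into nfull blocks of max_count elements each,
    ! followed by one trailing block of nlast (< max_count) elements.
    ! nlast is zero when n is an exact multiple of max_count.
    pure subroutine block_layout(n, max_count, nfull, nlast)
        integer(int64), intent(in)  :: n, max_count
        integer(int64), intent(out) :: nfull, nlast

        nfull = n / max_count
        nlast = mod(n, max_count)
    end subroutine block_layout

end module block_layout_sketch
```

In MPI terms, the full blocks could for instance be described with MPI_Type_contiguous and combined with the trailing block via MPI_Type_create_struct; this is one possible construction, not necessarily the one used by mpi_mod_create_block_array.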

◆ mpi_mod_file_close()

| subroutine mpi_gbl::mpi_mod_file_close | ( | integer(mpiint), intent(inout) | fh, |

| integer, intent(out) | ierr | ||

| ) |

◆ mpi_mod_file_open_read()

| subroutine mpi_gbl::mpi_mod_file_open_read | ( | character(len=*), intent(in) | filename, |

| integer(mpiint), intent(out) | fh, | ||

| integer, intent(out) | ierr, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_file_open_write()

| subroutine mpi_gbl::mpi_mod_file_open_write | ( | character(len=*), intent(in) | filename, |

| integer(mpiint), intent(out) | fh, | ||

| integer, intent(out) | ierr, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_file_read_ep()

| subroutine mpi_gbl::mpi_mod_file_read_ep | ( | integer, intent(in) | fh, |

| real(kind=ep1), dimension(:), intent(out) | buffer, | ||

| integer, intent(in) | buflen, | ||

| integer, intent(out) | ierr | ||

| ) |

◆ mpi_mod_file_read_wp()

| subroutine mpi_gbl::mpi_mod_file_read_wp | ( | integer, intent(in) | fh, |

| real(kind=wp), dimension(:), intent(out) | buffer, | ||

| integer, intent(in) | buflen, | ||

| integer, intent(out) | ierr | ||

| ) |

◆ mpi_mod_file_set_size()

| subroutine mpi_gbl::mpi_mod_file_set_size | ( | integer(mpiint), intent(in) | fh, |

| integer, intent(in) | sz | ||

| ) |

Collectively resize file.

- Date

- 2019

Changes the size of a file; mostly used to truncate the file to zero size. Only available when compiled with MPI.

- Parameters

- [in] fh: File handle as returned by mpi_mod_file_open_*.
- [in] sz: New size of the file in bytes.

◆ mpi_mod_file_set_view_ep()

| subroutine mpi_gbl::mpi_mod_file_set_view_ep | ( | integer, intent(in) | fh, |

| integer, intent(in) | disp, | ||

| integer, intent(out) | ierr, | ||

| real(kind=ep), intent(in) | ep_dummy | ||

| ) |

Sets view for a file containing floating point numbers with kind=ep.

◆ mpi_mod_file_set_view_wp()

| subroutine mpi_gbl::mpi_mod_file_set_view_wp | ( | integer, intent(in) | fh, |

| integer, intent(in) | disp, | ||

| integer, intent(out) | ierr, | ||

| real(kind=wp), intent(in) | wp_dummy | ||

| ) |

Sets view for a file containing floating point numbers with kind=wp.
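
The dummy arguments wp_dummy and ep_dummy are presumably there so that the generic interface mpi_mod_file_set_view can select the specific routine from the kind of an actual argument (our reading, not stated above). A self-contained sketch of that idiom, with a hypothetical generic elem_bytes and with real64/real128 standing in for wp/ep:

```fortran
module kind_dispatch_sketch
    use iso_fortran_env, only: real64, real128
    implicit none
    private
    public :: elem_bytes

    ! Generic name resolved at compile time from the kind of the dummy argument.
    interface elem_bytes
        module procedure elem_bytes_real64, elem_bytes_real128
    end interface elem_bytes

contains

    ! Only the kind of x matters; its value is never used.
    pure integer function elem_bytes_real64(x) result(nbytes)
        real(real64), intent(in) :: x
        nbytes = storage_size(x) / 8
    end function elem_bytes_real64

    pure integer function elem_bytes_real128(x) result(nbytes)
        real(real128), intent(in) :: x
        nbytes = storage_size(x) / 8
    end function elem_bytes_real128

end module kind_dispatch_sketch
```

Because only the kind of the actual argument matters, a call such as elem_bytes(0.0_real128) resolves to the 16-byte variant at compile time, with no runtime branching.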

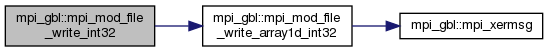

◆ mpi_mod_file_write_array1d_int32()

| subroutine mpi_gbl::mpi_mod_file_write_array1d_int32 | ( | integer(mpiint), intent(in) | fh, |

| integer(int32), dimension(length), intent(in) | array, | ||

| integer, intent(in) | length | ||

| ) |

Let master process write array of 4-byte integers to stream file.

- Date

- 2019

Uses a blocking collective MPI output routine to write an array of integers. Only the master process writes; the other processes behave as if the provided length parameter were zero. The integers are written at the master's file position, which is then advanced. The file positions of the other processes remain unchanged.

- Parameters

- [in] fh: File handle as returned by mpi_mod_file_open_*.
- [in] array: Integer array to write.
- [in] length: Number of elements in the array.

◆ mpi_mod_file_write_array1d_real64()

| subroutine mpi_gbl::mpi_mod_file_write_array1d_real64 | ( | integer(mpiint), intent(in) | fh, |

| real(real64), dimension(length), intent(in) | array, | ||

| integer, intent(in) | length | ||

| ) |

Let master process write array of 8-byte reals to stream file.

- Date

- 2019

Uses a blocking collective MPI output routine to write an array of reals. Only the master process writes; the other processes behave as if the provided length parameter were zero. The reals are written at the master's file position, which is then advanced. The file positions of the other processes remain unchanged.

- Parameters

- [in] fh: File handle as returned by mpi_mod_file_open_*.
- [in] array: Real array to write.
- [in] length: Number of elements in the array.

◆ mpi_mod_file_write_array2d_int32()

| subroutine mpi_gbl::mpi_mod_file_write_array2d_int32 | ( | integer(mpiint), intent(in) | fh, |

| integer(int32), dimension(length1, length2), intent(in) | array, | ||

| integer, intent(in) | length1, | ||

| integer, intent(in) | length2 | ||

| ) |

Let master process write 2d array of 4-byte integers to stream file.

- Date

- 2019

Convenience wrapper around mpi_mod_file_write_array1d_int32, which does the real work.

- Parameters

- [in] fh: File handle as returned by mpi_mod_file_open_*.
- [in] array: Two-dimensional integer array to write.
- [in] length1: Number of rows (= leading dimension).
- [in] length2: Number of columns.
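
Delegation to the 1D routine works because Fortran stores arrays in column-major order, so a length1-by-length2 array is laid out exactly like a one-dimensional array of length1*length2 elements. A sketch of the resulting element order, using the intrinsic reshape in a hypothetical helper flatten2d (not part of the library):

```fortran
module flatten_sketch
    use iso_fortran_env, only: int32
    implicit none
    private
    public :: flatten2d

contains

    ! Returns the elements of a in the order they are stored in memory
    ! (column-major: the first index varies fastest), i.e. the order in
    ! which a 1D writer would put them into the file.
    pure function flatten2d(a) result(v)
        integer(int32), intent(in) :: a(:, :)
        integer(int32) :: v(size(a))

        v = reshape(a, [size(a)])
    end function flatten2d

end module flatten_sketch
```

Passing transpose(array) instead would put the rows one after another, which matters if the file is later read by row-major code. The same reasoning applies to the real64 2D and 3D writers.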

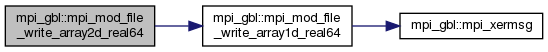

◆ mpi_mod_file_write_array2d_real64()

| subroutine mpi_gbl::mpi_mod_file_write_array2d_real64 | ( | integer(mpiint), intent(in) | fh, |

| real(real64), dimension(length1, length2), intent(in) | array, | ||

| integer, intent(in) | length1, | ||

| integer, intent(in) | length2 | ||

| ) |

Let master process write 2d array of 8-byte reals to stream file.

- Date

- 2019

Convenience wrapper around mpi_mod_file_write_array1d_real64, which does the real work.

- Parameters

- [in] fh: File handle as returned by mpi_mod_file_open_*.
- [in] array: Two-dimensional real array to write.
- [in] length1: Number of rows (= leading dimension).
- [in] length2: Number of columns.

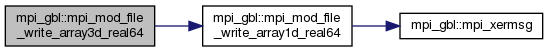

◆ mpi_mod_file_write_array3d_real64()

| subroutine mpi_gbl::mpi_mod_file_write_array3d_real64 | ( | integer(mpiint), intent(in) | fh, |

| real(real64), dimension(length1, length2, length3), intent(in) | array, | ||

| integer, intent(in) | length1, | ||

| integer, intent(in) | length2, | ||

| integer, intent(in) | length3 | ||

| ) |

Let master process write 3d array of 8-byte reals to stream file.

- Date

- 2019

Convenience wrapper around mpi_mod_file_write_array1d_real64, which does the real work.

- Parameters

- [in] fh: File handle as returned by mpi_mod_file_open_*.
- [in] array: Three-dimensional real array to write.
- [in] length1: Leading (first) dimension.
- [in] length2: Second dimension.
- [in] length3: Third dimension.

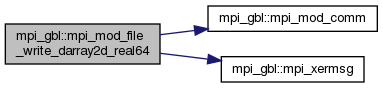

◆ mpi_mod_file_write_darray2d_real64()

| subroutine mpi_gbl::mpi_mod_file_write_darray2d_real64 | ( | integer(mpiint), intent(in) | fh, |

| integer, intent(in) | m, | ||

| integer, intent(in) | n, | ||

| integer, intent(in) | nprow, | ||

| integer, intent(in) | npcol, | ||

| integer, intent(in) | rb, | ||

| integer, intent(in) | cb, | ||

| real(real64), dimension(myrows, mycols), intent(in) | locA, | ||

| integer, intent(in) | myrows, | ||

| integer, intent(in) | mycols, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

Write block-cyclically distributed matrix to a stream file.

- Date

- 2019

Assumes a ScaLAPACK-compatible, row-major (!) block-cyclic distribution. The data are written at the master process's file position, which the master first broadcasts to the other processes.

- Warning

- Currently, the local portion of the distributed matrix must not exceed 2**31 elements (i.e. 16 GiB of real64 data).

- Parameters

- [in] fh: File handle as returned by mpi_mod_file_open_*.
- [in] m: Number of rows in the distributed matrix.
- [in] n: Number of columns in the distributed matrix.
- [in] nprow: Number of rows in the process grid.
- [in] npcol: Number of columns in the process grid.
- [in] rb: Number of rows in each block (row block size).
- [in] cb: Number of columns in each block (column block size).
- [in] locA: Local portion of the distributed matrix.
- [in] myrows: Number of rows in locA.
- [in] mycols: Number of columns in locA.
- [in] comm: Optional communicator on which the file is shared (MPI_COMM_WORLD is used if not provided).
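
The local dimensions myrows and mycols follow from the block-cyclic layout. In ScaLAPACK they are usually obtained with the NUMROC tool routine; the sketch below re-implements the same formula so that it can be checked without ScaLAPACK. The name local_count is hypothetical, and the sketch assumes that the first block is owned by process row/column 0.

```fortran
module block_cyclic_sketch
    implicit none
    private
    public :: local_count

contains

    ! Number of rows (or columns) of an n-long dimension, split into blocks
    ! of nb, that land on process coordinate iproc out of nprocs, assuming
    ! the first block is owned by coordinate 0 (same formula as NUMROC).
    pure integer function local_count(n, nb, iproc, nprocs) result(cnt)
        integer, intent(in) :: n, nb, iproc, nprocs
        integer :: nblocks, extra

        nblocks = n / nb                 ! number of complete blocks
        cnt = (nblocks / nprocs) * nb    ! full rounds of blocks dealt to every process
        extra = mod(nblocks, nprocs)     ! processes receiving one more complete block

        if (iproc < extra) then
            cnt = cnt + nb
        else if (iproc == extra) then
            cnt = cnt + mod(n, nb)       ! the trailing partial block, if any
        end if
    end function local_count

end module block_cyclic_sketch
```

Under the additional assumption that ranks are numbered row-major in the nprow-by-npcol grid, a process of rank myrank would sit at myprow = myrank / npcol and mypcol = mod(myrank, npcol), giving myrows = local_count(m, rb, myprow, nprow) and mycols = local_count(n, cb, mypcol, npcol).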

◆ mpi_mod_file_write_int32()

| subroutine mpi_gbl::mpi_mod_file_write_int32 | ( | integer(mpiint), intent(in) | fh, |

| integer(int32), intent(in) | n | ||

| ) |

Let master process write one 4-byte integer to stream file.

- Date

- 2019

Convenience wrapper around mpi_mod_file_write_array1d_int32, which does the real work.

- Parameters

- [in] fh: File handle as returned by mpi_mod_file_open_*.
- [in] n: Integer to write.

◆ mpi_mod_file_write_real64()

| subroutine mpi_gbl::mpi_mod_file_write_real64 | ( | integer(mpiint), intent(in) | fh, |

| real(real64), intent(in) | x | ||

| ) |

Let master process write an 8-byte real to stream file.

- Date

- 2019

Convenience wrapper around mpi_mod_file_write_array1d_real64, which does the real work.

- Parameters

- [in] fh: File handle as returned by mpi_mod_file_open_*.
- [in] x: Real number to write.

◆ mpi_mod_finalize()

| subroutine mpi_gbl::mpi_mod_finalize |

Terminates the MPI session and stops the program. It is a blocking routine.

◆ mpi_mod_free_block_array()

| subroutine mpi_gbl::mpi_mod_free_block_array | ( | type(mpiblockarray), intent(inout) | this | ) |

Release MPI handle of the block array type.

- Date

- 2019

This is a destructor for MPIBlockArray. It frees the handle for the MPI derived type (if any).

◆ mpi_mod_gatherv_int32()

| subroutine mpi_gbl::mpi_mod_gatherv_int32 | ( | integer(int32), dimension(:), intent(in) | sendbuf, |

| integer(int32), dimension(:), intent(out) | recvbuf, | ||

| integer(mpiint), dimension(:), intent(in) | recvcounts, | ||

| integer(mpiint), dimension(:), intent(in) | displs, | ||

| integer(mpiint), intent(in) | to, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

Gather of a 32-bit integer array sent from every process and received on a given rank.

◆ mpi_mod_gatherv_int64()

| subroutine mpi_gbl::mpi_mod_gatherv_int64 | ( | integer(int64), dimension(:), intent(in) | sendbuf, |

| integer(int64), dimension(:), intent(out) | recvbuf, | ||

| integer(mpiint), dimension(:), intent(in) | recvcounts, | ||

| integer(mpiint), dimension(:), intent(in) | displs, | ||

| integer(mpiint), intent(in) | to, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

Gather of a 64-bit integer array sent from every process and received on a given rank.

◆ mpi_mod_gatherv_real128()

| subroutine mpi_gbl::mpi_mod_gatherv_real128 | ( | real(real128), dimension(:), intent(in) | sendbuf, |

| real(real128), dimension(:), intent(out) | recvbuf, | ||

| integer(mpiint), dimension(:), intent(in) | recvcounts, | ||

| integer(mpiint), dimension(:), intent(in) | displs, | ||

| integer(mpiint), intent(in) | to, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

Gather of a 128-bit real array sent from every process and received on a given rank.

◆ mpi_mod_gatherv_real64()

| subroutine mpi_gbl::mpi_mod_gatherv_real64 | ( | real(real64), dimension(:), intent(in) | sendbuf, |

| real(real64), dimension(:), intent(out) | recvbuf, | ||

| integer(mpiint), dimension(:), intent(in) | recvcounts, | ||

| integer(mpiint), dimension(:), intent(in) | displs, | ||

| integer(mpiint), intent(in) | to, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

Gathers the 64-bit (double-precision) real arrays sent by all processes into recvbuf on the rank given by to.
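The mpi_mod_gatherv_* routines take the same recvcounts and displs arguments as MPI_Gatherv, where the block from process i lands in recvbuf at a zero-based element offset displs(i+1) and spans recvcounts(i+1) elements (in Fortran, recvbuf(displs(i+1)+1 : displs(i+1)+recvcounts(i+1))). The Python below is only a serial model of that placement rule, written as a sketch; `gatherv_model` is a hypothetical name and nothing here calls the library or MPI.

```python
def gatherv_model(sendbufs, recvcounts, displs, recv_size, fill=None):
    """Serial model of MPI_Gatherv placement on the receiving (root) process.

    sendbufs[i] is the buffer contributed by rank i; its first recvcounts[i]
    elements are written to recvbuf[displs[i] : displs[i] + recvcounts[i]].
    Displacements are zero-based and counted in elements, not bytes.
    """
    if not (len(sendbufs) == len(recvcounts) == len(displs)):
        raise ValueError("need one send buffer, count and displacement per rank")
    recvbuf = [fill] * recv_size
    for rank, (buf, count, disp) in enumerate(zip(sendbufs, recvcounts, displs)):
        if count > len(buf):
            raise ValueError(f"rank {rank} sends fewer than {count} elements")
        if disp + count > recv_size:
            raise ValueError(f"block from rank {rank} overruns recvbuf")
        recvbuf[disp:disp + count] = buf[:count]
    return recvbuf


# Three ranks contribute blocks of different lengths. Packing them
# contiguously means displs is the exclusive prefix sum of recvcounts.
counts = [2, 3, 1]
displs = [sum(counts[:i]) for i in range(len(counts))]  # [0, 2, 5]
result = gatherv_model([[10, 11], [20, 21, 22], [30]], counts, displs, sum(counts))
print(result)  # [10, 11, 20, 21, 22, 30]
```

Displacements need not be contiguous or increasing; any layout works as long as the blocks do not overlap and fit inside recvbuf.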

◆ mpi_mod_isend_int32_array()

| subroutine mpi_gbl::mpi_mod_isend_int32_array | ( | integer(mpiint), intent(in) | to, |

| integer(int32), dimension(:), intent(in) | buffer, | ||

| integer, intent(in) | tag, | ||

| integer, intent(in) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_isend_int64_array()

| subroutine mpi_gbl::mpi_mod_isend_int64_array | ( | integer(mpiint), intent(in) | to, |

| integer(int64), dimension(:), intent(in) | buffer, | ||

| integer, intent(in) | tag, | ||

| integer, intent(in) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_print_info()

| subroutine mpi_gbl::mpi_mod_print_info | ( | integer, intent(in) | u | ) |

Display information about current MPI setup.

- Date

- 2014 - 2019

Originally part of mpi_mod_start, but separated for better application-level control of output from the library.

- Parameters

- [in] u Unit for the text output (mostly stdout).

◆ mpi_mod_rank()

| subroutine mpi_gbl::mpi_mod_rank | ( | integer(mpiint), intent(out) | rank, |

| integer(mpiint), intent(in), optional | comm | ||

| ) |

Wrapper around MPI_Comm_rank.

- Date

- 2019

Wrapper around a standard MPI rank query which returns zero when compiled without MPI.

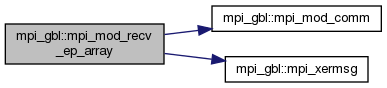

◆ mpi_mod_recv_ep_array()

| subroutine mpi_gbl::mpi_mod_recv_ep_array | ( | integer(mpiint), intent(in) | from, |

| integer, intent(in) | tag, | ||

| real(ep), dimension(:), intent(out) | buffer, | ||

| integer, intent(in) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

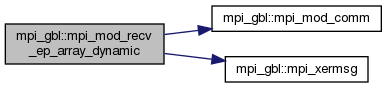

◆ mpi_mod_recv_ep_array_dynamic()

| subroutine mpi_gbl::mpi_mod_recv_ep_array_dynamic | ( | integer(mpiint), intent(in) | from, |

| integer, intent(in) | tag, | ||

| real(ep), dimension(:), intent(out), allocatable | buffer, | ||

| integer, intent(out) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

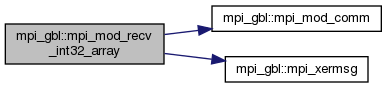

◆ mpi_mod_recv_int32_array()

| subroutine mpi_gbl::mpi_mod_recv_int32_array | ( | integer(mpiint), intent(in) | from, |

| integer, intent(in) | tag, | ||

| integer(int32), dimension(:), intent(out) | buffer, | ||

| integer, intent(in) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_recv_int64_array()

| subroutine mpi_gbl::mpi_mod_recv_int64_array | ( | integer(mpiint), intent(in) | from, |

| integer, intent(in) | tag, | ||

| integer(int64), dimension(:), intent(out) | buffer, | ||

| integer, intent(in) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_recv_wp_array()

| subroutine mpi_gbl::mpi_mod_recv_wp_array | ( | integer(mpiint), intent(in) | from, |

| integer, intent(in) | tag, | ||

| real(wp), dimension(:), intent(out) | buffer, | ||

| integer, intent(in) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_recv_wp_array_dynamic()

| subroutine mpi_gbl::mpi_mod_recv_wp_array_dynamic | ( | integer(mpiint), intent(in) | from, |

| integer, intent(in) | tag, | ||

| real(wp), dimension(:), intent(out), allocatable | buffer, | ||

| integer, intent(out) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_rotate_arrays_around_ring_ep()

| subroutine mpi_gbl::mpi_mod_rotate_arrays_around_ring_ep | ( | integer(longint), intent(inout) | elem_count, |

| integer(longint), dimension(:), intent(inout) | int_array, | ||

| real(ep), dimension(:), intent(inout) | ep_array, | ||

| integer(longint), intent(in) | max_num_elements, | ||

| integer(mpiint), intent(in), optional | comm_opt | ||

| ) |

Rotates the element count, an integer(longint) array and a real(ep) array once around the processes arranged in a ring.
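The description does not say which neighbour each process exchanges with. The Python below is a serial model of a single shift by one position under the assumption, not taken from the library, that rank r receives the payload of rank (r - 1) mod nprocs and sends its own to rank (r + 1) mod nprocs; the real routines may rotate the other way. `rotate_around_ring` is a hypothetical name, and max_num_elements is treated only as the capacity that elem_count may not exceed.

```python
def rotate_around_ring(elem_counts, int_arrays, real_arrays, max_num_elements):
    """Serial model of one rotation step around a ring of processes.

    Index r of each list stands for rank r. ASSUMPTION (not from the
    library): rank r receives the payload of rank (r - 1) mod nprocs and
    sends its own to rank (r + 1) mod nprocs.
    """
    nprocs = len(elem_counts)
    if any(count > max_num_elements for count in elem_counts):
        raise ValueError("elem_count may not exceed max_num_elements")
    src = [(r - 1) % nprocs for r in range(nprocs)]  # left neighbour of each rank
    return (
        [elem_counts[s] for s in src],
        [list(int_arrays[s]) for s in src],
        [list(real_arrays[s]) for s in src],
    )


# Three ranks; only the first elem_count entries of each buffer are meaningful.
counts = [1, 2, 0]
ints = [[7, 0], [8, 9], [0, 0]]
reals = [[0.5, 0.0], [1.5, 2.5], [0.0, 0.0]]

state = (counts, ints, reals)
for step in range(len(counts)):
    state = rotate_around_ring(*state, max_num_elements=2)
    print(step + 1, state[0])
# After nprocs steps every payload is back on its original rank.
```

Because the element count travels with the arrays, each receiver knows how many leading entries of the fixed-size buffers are valid after every step.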

◆ mpi_mod_rotate_arrays_around_ring_wp()

| subroutine mpi_gbl::mpi_mod_rotate_arrays_around_ring_wp | ( | integer(longint), intent(inout) | elem_count, |

| integer(longint), dimension(:), intent(inout) | int_array, | ||

| real(wp), dimension(:), intent(inout) | wp_array, | ||

| integer(longint), intent(in) | max_num_elements, | ||

| integer(mpiint), intent(in), optional | comm_opt | ||

| ) |

Rotates the element count, an integer(longint) array and a real(wp) array once around the processes arranged in a ring.

◆ mpi_mod_rotate_ep_arrays_around_ring()

| subroutine mpi_gbl::mpi_mod_rotate_ep_arrays_around_ring | ( | integer(longint), intent(inout) | elem_count, |

| real(ep), dimension(:), intent(inout) | ep_array, | ||

| integer(longint), intent(in) | max_num_elements, | ||

| integer(mpiint), intent(in), optional | communicator | ||

| ) |

Rotates the element count and a real(ep) array once around the processes arranged in a ring.

◆ mpi_mod_rotate_int_arrays_around_ring()

| subroutine mpi_gbl::mpi_mod_rotate_int_arrays_around_ring | ( | integer(longint), intent(inout) | elem_count, |

| integer(longint), dimension(:), intent(inout) | int_array, | ||

| integer(longint), intent(in) | max_num_elements, | ||

| integer(mpiint), intent(in), optional | communicator | ||

| ) |

Rotates the element count and an integer(longint) array once around the processes arranged in a ring.

◆ mpi_mod_rotate_wp_arrays_around_ring()

| subroutine mpi_gbl::mpi_mod_rotate_wp_arrays_around_ring | ( | integer(longint), intent(inout) | elem_count, |

| real(wp), dimension(:), intent(inout) | wp_array, | ||

| integer(longint), intent(in) | max_num_elements, | ||

| integer(mpiint), intent(in), optional | communicator | ||

| ) |

Rotates the element count and a real(wp) array once around the processes arranged in a ring.

◆ mpi_mod_scan_sum_int32()

| subroutine mpi_gbl::mpi_mod_scan_sum_int32 | ( | integer(int32), intent(in) | src, |

| integer(int32), intent(inout) | dst, | ||

| integer(mpiint), intent(in), optional | comm_opt | ||

| ) |

Wrapper around MPI_Scan.

- Date

- 2019

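Calls MPI_Scan on a 32-bit integer scalar with the operation MPI_SUM, so that dst on each rank receives the sum of src over that rank and all lower-ranked processes.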
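MPI_Scan with MPI_SUM is an inclusive prefix sum over ranks. A common use is turning per-process element counts into global starting offsets by subtracting each rank's own contribution. The Python below is only a serial model of these semantics, as a sketch; `scan_sum_model` is a hypothetical name and nothing here calls the library.

```python
from itertools import accumulate


def scan_sum_model(src_per_rank):
    """Serial model of MPI_Scan with MPI_SUM (an inclusive prefix sum).

    src_per_rank[r] is the value passed as src on rank r; the returned
    list holds the value each rank receives in dst.
    """
    return list(accumulate(src_per_rank))


# Each rank owns n_local items and needs the global index of its first item.
n_local = [4, 0, 3, 5]
inclusive = scan_sum_model(n_local)                     # [4, 4, 7, 12]
offsets = [s - n for s, n in zip(inclusive, n_local)]   # [0, 4, 4, 7]
print(inclusive, offsets)
```

The last rank's inclusive value is the global total, so a separate reduction is not needed when only that rank requires it.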

◆ mpi_mod_scan_sum_int64()

| subroutine mpi_gbl::mpi_mod_scan_sum_int64 | ( | integer(int64), intent(in) | src, |

| integer(int64), intent(inout) | dst, | ||

| integer(mpiint), intent(in), optional | comm_opt | ||

| ) |

Wrapper around MPI_Scan.

- Date

- 2019

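Calls MPI_Scan on a 64-bit integer scalar with the operation MPI_SUM, so that dst on each rank receives the sum of src over that rank and all lower-ranked processes.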

◆ mpi_mod_send_ep_array()

| subroutine mpi_gbl::mpi_mod_send_ep_array | ( | integer(mpiint), intent(in) | to, |

| real(ep), dimension(:), intent(in) | buffer, | ||

| integer, intent(in) | tag, | ||

| integer, intent(in) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_send_ep_array_dynamic()

| subroutine mpi_gbl::mpi_mod_send_ep_array_dynamic | ( | integer(mpiint), intent(in) | to, |

| real(ep), dimension(:), intent(in) | buffer, | ||

| integer, intent(in) | tag, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_send_int32_array()

| subroutine mpi_gbl::mpi_mod_send_int32_array | ( | integer(mpiint), intent(in) | to, |

| integer(int32), dimension(:), intent(in) | buffer, | ||

| integer, intent(in) | tag, | ||

| integer, intent(in) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_send_int64_array()

| subroutine mpi_gbl::mpi_mod_send_int64_array | ( | integer(mpiint), intent(in) | to, |

| integer(int64), dimension(:), intent(in) | buffer, | ||

| integer, intent(in) | tag, | ||

| integer, intent(in) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_send_wp_array()

| subroutine mpi_gbl::mpi_mod_send_wp_array | ( | integer(mpiint), intent(in) | to, |

| real(wp), dimension(:), intent(in) | buffer, | ||

| integer, intent(in) | tag, | ||

| integer, intent(in) | n, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_send_wp_array_dynamic()

| subroutine mpi_gbl::mpi_mod_send_wp_array_dynamic | ( | integer(mpiint), intent(in) | to, |

| real(wp), dimension(:), intent(in) | buffer, | ||

| integer, intent(in) | tag, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_mod_start()

| subroutine mpi_gbl::mpi_mod_start | ( | logical, intent(in), optional | do_stdout, |

| logical, intent(in), optional | allow_shared_memory | ||

| ) |

Initializes MPI, assigns the rank of each process and determines the total number of processes. It also maps the current floating-point precision (kind=cfp) to the corresponding MPI numeric type and sets the unit for standard output that each rank will use (the value of stdout). In a serial run, stdout is not set here and keeps its default value, input_unit, as specified in the module const.

- Warning

- This must be the first statement in every level3 program.

◆ mpi_mod_wtime()

| real(wp) function mpi_gbl::mpi_mod_wtime |

Interface to the routine MPI_WTIME, which returns the elapsed wall-clock time in seconds.

◆ mpi_reduce_inplace_sum_ep()

| subroutine mpi_gbl::mpi_reduce_inplace_sum_ep | ( | real(kind=ep), dimension(:), intent(inout) | buffer, |

| integer, intent(in) | nelem | ||

| ) |

◆ mpi_reduce_inplace_sum_wp()

| subroutine mpi_gbl::mpi_reduce_inplace_sum_wp | ( | real(kind=wp), dimension(:), intent(inout) | buffer, |

| integer, intent(in) | nelem | ||

| ) |

◆ mpi_reduce_sum_ep()

| subroutine mpi_gbl::mpi_reduce_sum_ep | ( | real(kind=ep), dimension(:), intent(in) | src, |

| real(kind=ep), dimension(:), intent(in) | dest, | ||

| integer, intent(in) | nelem | ||

| ) |

◆ mpi_reduce_sum_wp()

| subroutine mpi_gbl::mpi_reduce_sum_wp | ( | real(kind=wp), dimension(:), intent(in) | src, |

| real(kind=wp), dimension(:), intent(in) | dest, | ||

| integer, intent(in) | nelem | ||

| ) |

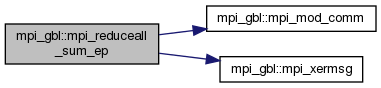

◆ mpi_reduceall_inplace_sum_ep()

| subroutine mpi_gbl::mpi_reduceall_inplace_sum_ep | ( | real(kind=ep), dimension(:), intent(inout) | buffer, |

| integer, intent(in) | nelem, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

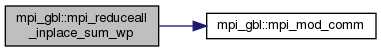

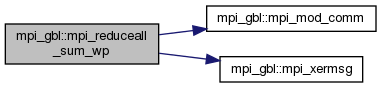

◆ mpi_reduceall_inplace_sum_wp()

| subroutine mpi_gbl::mpi_reduceall_inplace_sum_wp | ( | real(kind=wp), dimension(:), intent(inout) | buffer, |

| integer, intent(in) | nelem, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_reduceall_max_int32()

| subroutine mpi_gbl::mpi_reduceall_max_int32 | ( | integer(int32), intent(in) | src, |

| integer(int32), intent(inout) | dest, | ||

| integer(mpiint), intent(in), optional | comm_opt | ||

| ) |

Selects the largest value of a 32-bit integer among all processes and returns it in dest on every process.

- Date

- 2017 - 2020

◆ mpi_reduceall_max_int64()

| subroutine mpi_gbl::mpi_reduceall_max_int64 | ( | integer(int64), intent(in) | src, |

| integer(int64), intent(inout) | dest, | ||

| integer(mpiint), intent(in), optional | comm_opt | ||

| ) |

Selects the largest value of a 64-bit integer among all processes and returns it in dest on every process.

- Date

- 2017 - 2020

◆ mpi_reduceall_min_int32()

| subroutine mpi_gbl::mpi_reduceall_min_int32 | ( | integer(int32), intent(in) | src, |

| integer(int32), intent(inout) | dest, | ||

| integer(mpiint), intent(in), optional | comm_opt | ||

| ) |

Selects the smallest value of a 32-bit integer among all processes and returns it in dest on every process.

- Date

- 2017 - 2020

◆ mpi_reduceall_min_int64()

| subroutine mpi_gbl::mpi_reduceall_min_int64 | ( | integer(int64), intent(in) | src, |

| integer(int64), intent(inout) | dest, | ||

| integer(mpiint), intent(in), optional | comm_opt | ||

| ) |

Selects the smallest value of a 64-bit integer among all processes and returns it in dest on every process.

- Date

- 2017 - 2020
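The "reduceall" routines behave as an all-reduce: every process contributes src and every process receives the same reduced value in dest. The Python below is a serial model of that behaviour for the max and min variants, offered as a sketch; it assumes MPI_MAX/MPI_MIN-style semantics inferred from the routine names, and `reduceall_model` is a hypothetical name.

```python
def reduceall_model(src_per_rank, op):
    """Serial model of an all-reduce.

    src_per_rank[r] is the src value on rank r; op combines all of them
    (here the built-in max or min). Every rank receives the same result.
    """
    result = op(src_per_rank)
    return [result] * len(src_per_rank)


values = [3, -7, 12, 5]
print(reduceall_model(values, max))  # [12, 12, 12, 12]
print(reduceall_model(values, min))  # [-7, -7, -7, -7]
```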

◆ mpi_reduceall_sum_ep()

| subroutine mpi_gbl::mpi_reduceall_sum_ep | ( | real(kind=ep), dimension(:), intent(in) | src, |

| real(kind=ep), dimension(:), intent(in) | dest, | ||

| integer, intent(in) | nelem, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |

◆ mpi_reduceall_sum_wp()

| subroutine mpi_gbl::mpi_reduceall_sum_wp | ( | real(kind=wp), dimension(:), intent(in) | src, |

| real(kind=wp), dimension(:), intent(in) | dest, | ||

| integer, intent(in) | nelem, | ||

| integer(mpiint), intent(in), optional | comm | ||

| ) |
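The summing variants combine arrays element by element. The Python below models that behaviour serially as a sketch, under the assumption, not confirmed by this page, that only the first nelem entries of each src array take part (mirroring the count argument of MPI_Allreduce) and that every process receives the full result in dest. `reduceall_sum_model` is a hypothetical name.

```python
def reduceall_sum_model(src_per_rank, nelem):
    """Serial model of an element-wise all-reduce with MPI_SUM.

    ASSUMPTION: only the first nelem entries of each src array take part.
    Returns one dest array per rank; all of them hold the same sums.
    """
    total = [0.0] * nelem
    for src in src_per_rank:
        if len(src) < nelem:
            raise ValueError("every src array needs at least nelem entries")
        for i in range(nelem):
            total[i] += src[i]
    # Every rank receives its own copy of the same result.
    return [list(total) for _ in src_per_rank]


# Two ranks; the third entry of each array lies beyond nelem and is ignored.
dest = reduceall_sum_model([[1.0, 2.0, 9.0], [0.5, 0.25, 9.0]], nelem=2)
print(dest)  # [[1.5, 2.25], [1.5, 2.25]]
```

In real floating-point MPI reductions the summation order may differ between implementations, so results can differ in the last bits from a serial sum.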

◆ mpi_xermsg()

| subroutine mpi_gbl::mpi_xermsg | ( | character(len=*), intent(in) | mod_name, |

| character(len=*), intent(in) | routine_name, | ||

| character(len=*), intent(in) | err_msg, | ||

| integer, intent(in) | err, | ||

| integer, intent(in) | level | ||

| ) |

Analogue of the xermsg routine. It uses xermsg to print the error message and then, depending on the level of the message (warning or error, defined in the same way as in xermsg), either aborts the program or returns from the routine.

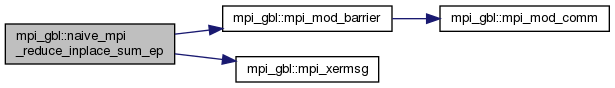

◆ naive_mpi_reduce_inplace_sum_ep()

| subroutine mpi_gbl::naive_mpi_reduce_inplace_sum_ep | ( | real(kind=ep), dimension(:), intent(inout) | buffer, |

| integer, intent(in) | nelem, | ||

| logical, intent(in) | split | ||

| ) |

◆ naive_mpi_reduce_inplace_sum_wp()

| subroutine mpi_gbl::naive_mpi_reduce_inplace_sum_wp | ( | real(kind=wp), dimension(:), intent(inout) | buffer, |

| integer, intent(in) | nelem, | ||

| logical, intent(in) | split | ||

| ) |

◆ setup_intranode_local_mem_communicator()

| subroutine mpi_gbl::setup_intranode_local_mem_communicator |

Creates intra-node communicators without the memory-sharing capability. This routine is not yet used anywhere in the code but may become useful later.

◆ strings_are_same()

| logical function mpi_gbl::strings_are_same | ( | character(len=length), intent(in) | str1, |

| character(len=length), intent(in) | str2, | ||

| integer, intent(in) | length | ||

| ) |

Variable Documentation

◆ local_nprocs

| integer(kind=mpiint), public, protected mpi_gbl::local_nprocs = 1 |

Total number of processes on the node this task is bound to. Set by mpi_mod_start.

◆ local_rank

| integer(kind=mpiint), public, protected mpi_gbl::local_rank = 0 |

The local process number (rank) on the node this task is bound to. Set by mpi_mod_start.
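local_rank and local_nprocs describe the position of a process within the group of processes sharing its node. The Python below is a serial model of how such values follow from grouping global ranks by node, as a sketch; the hostnames list stands in for whatever node identification MPI uses, `node_local_ranks` is a hypothetical name, and it assumes that ranks within a node are ordered by their global rank (as when the split key is the global rank), which this page does not confirm.

```python
from collections import defaultdict


def node_local_ranks(hostnames):
    """Serial model of splitting global ranks into per-node groups.

    hostnames[r] is the node that global rank r runs on. Returns, for each
    global rank, the pair (local_rank, local_nprocs).
    ASSUMPTION: ranks within a node are ordered by their global rank.
    """
    members = defaultdict(list)
    for rank, host in enumerate(hostnames):
        members[host].append(rank)  # appended in increasing global-rank order
    return [
        (members[host].index(rank), len(members[host]))
        for rank, host in enumerate(hostnames)
    ]


print(node_local_ranks(["nodeA", "nodeB", "nodeA", "nodeA", "nodeB"]))
# [(0, 3), (0, 2), (1, 3), (2, 3), (1, 2)]
```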

◆ master

| integer(kind=mpiint), parameter, public mpi_gbl::master = 0 |

ID of the master process.

- Warning

- This must be set to 0; do not change it.

◆ max_data_count

| integer, public, protected mpi_gbl::max_data_count = huge(dummy_32bit_integer) |

Largest integer (e.g. number of elements) supported by the standard MPI 32-bit integer API.

◆ mpi_running

| logical, public, protected mpi_gbl::mpi_running = .false. |

This is set only once, at the beginning of the program run, by a call to MPI_MOD_START. Its value is read by MPI_MOD_CHECK, which aborts the program if MPI is not running. This variable stays .false. if the library was compiled without MPI support.

◆ mpi_started

| logical, public, protected mpi_gbl::mpi_started = .false. |

This is similar to mpi_running, but is always set to .true. once MPI_MOD_START is called, whether or not the library was built with MPI support. So, while mpi_running indicates whether the program is running under MPI, mpi_started indicates whether the call to MPI_MOD_START has already been made (even in a serial build).

◆ mpiaddr

| integer, parameter, public mpi_gbl::mpiaddr = longint |

◆ mpicnt

| integer, parameter, public mpi_gbl::mpicnt = longint |

◆ mpiint

| integer, parameter, public mpi_gbl::mpiint = kind(0) |

◆ mpiofs

| integer, parameter, public mpi_gbl::mpiofs = longint |

◆ myrank

| integer(kind=mpiint), public, protected mpi_gbl::myrank = -1 |

The local process number (rank). Set by mpi_mod_start.

◆ nprocs

| integer(kind=mpiint), public, protected mpi_gbl::nprocs = -1 |

Total number of processes. Set by mpi_mod_start.

◆ procname

| character(len=*), parameter, public, protected mpi_gbl::procname = "N/A" |

Name of the processor on which the current process is running.

◆ shared_communicator

| integer(kind=mpiint), public, protected mpi_gbl::shared_communicator = -1 |

Intra-node communicator created by mpi_mod_start.

◆ shared_enabled

| logical, public, protected mpi_gbl::shared_enabled = .false. |

The routine mpi_mod_start creates the shared_communicator, which groups all tasks on the same node. If the MPI 3.0 standard is available, the shared_communicator enables the creation of shared memory areas and shared_enabled is set to .true.; otherwise shared_enabled is set to .false.